Neural Networks

Activation Functions

Activation functions play a crucial role in characterising a neuron's behaviour. Whilst a step function may be used to construct universal networks at the theoretical level, in practice it tends to perform poorly or to complicate calculations. In this section we will briefly look at different types of activation functions.

Signum function

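Sometimes it is desirable that a neuron's output be $-1$ and $+1$ instead of $0$ and $1$. For a neuron with activation $v$, the two-way signum function is defined as

$$\varphi(v) = \begin{cases} +1 & \text{if } v \ge 0 \\ -1 & \text{if } v < 0 \end{cases}$$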

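This function is helpful because it amplifies differences between inputs, so that classification, for example, may be attained more quickly in some situations. Mathematically, this is a step function rather than the signum function, which is a three-way function defined as

$$\operatorname{sgn}(v) = \begin{cases} +1 & \text{if } v > 0 \\ 0 & \text{if } v = 0 \\ -1 & \text{if } v < 0 \end{cases}$$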
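The difference between the two-way function and the mathematical signum can be sketched in a few lines of Python. The function names are illustrative, and mapping an input of exactly zero to $+1$ in the two-way version is an assumed convention.

```python
def two_way_sign(v: float) -> int:
    """Bipolar step: +1 for v >= 0, otherwise -1 (never returns 0).

    Treating v == 0 as +1 is an assumed convention.
    """
    return 1 if v >= 0 else -1


def signum(v: float) -> int:
    """Mathematical signum: -1, 0 or +1."""
    return (v > 0) - (v < 0)


for v in (-2.5, 0.0, 0.3):
    print(v, two_way_sign(v), signum(v))
```

The two functions agree everywhere except at zero, where only the three-way signum returns 0.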

Linear function

The third function we consider is the linear function

$$\varphi(v) = v$$

Being the simplest function possible does not make it useless: linear regression can be implemented using a very simple network whose neurons have this activation function, as we will see later in this chapter.

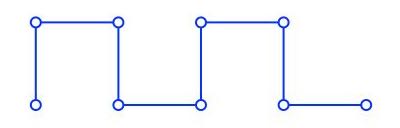

Piecewise linear function

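A piecewise linear function may be used as an activation function and may be defined as follows

$$\varphi(v) = \begin{cases} 1 & \text{if } v \ge \tfrac{1}{2} \\ v + \tfrac{1}{2} & \text{if } -\tfrac{1}{2} < v < \tfrac{1}{2} \\ 0 & \text{if } v \le -\tfrac{1}{2} \end{cases}$$

which can be regarded as an approximation to a nonlinear function. The breakpoints $-\tfrac{1}{2}$ and $\tfrac{1}{2}$ can be replaced with other appropriate values. Some interesting properties of this function are: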

- A linear combiner arises if the linear region is maintained without running into saturation.

- It reduces to a threshold function if the amplification factor of the linear region is made infinitely large.
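Both properties can be checked with a minimal sketch. It assumes outputs saturating at 0 and 1 and a linear region centred on zero. The `gain` parameter stands in for the amplification factor and is a name introduced here for illustration.

```python
def piecewise_linear(v: float, gain: float = 1.0) -> float:
    """Saturating linear unit with outputs clipped to [0, 1].

    The linear region is centred on v = 0 and has slope `gain`
    (an illustrative name for the amplification factor).
    """
    return min(1.0, max(0.0, gain * v + 0.5))


# Inside the linear region the unit behaves like a linear combiner.
print(piecewise_linear(0.25))   # 0.75
# Outside it, the output saturates.
print(piecewise_linear(2.0), piecewise_linear(-2.0))
# With a very large gain the unit acts like a threshold at v = 0.
print(piecewise_linear(1e-6, gain=1e9), piecewise_linear(-1e-6, gain=1e9))
```

As the gain grows, the linear region shrinks towards a single point, which is why the function approaches a threshold function in the limit.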

Non-binary signals

For biological neurons, the two binary values represent the action-potential voltage and the axon membrane resting potential. When applied to artificial neurons, these values are often labelled as $1$ and $0$, respectively. In biological neurons, it is accepted that information is encoded in terms of the frequency of firing rather than merely the presence or absence of a pulse.

This situation may be modelled in two ways: 1) the input signal could range over the positive real numbers, or 2) we could use a binary pulse stream in which the signal is encoded as the frequency of occurrence of $1$'s.

Continuous signals

Continuous signals work fine as inputs, but they are suppressed by the step function. This can be overcome by using a squashing function. A very common choice is the family of sigmoid functions. The sigmoid has the effect of softening the step function. In other words, its output can be regarded as a fuzzified version of the crisp value of the step function.

One convenient form of this function is

$$\varphi(v) = \frac{1}{1 + e^{-v/\rho}}$$

where $\rho$ determines the shape of the sigmoid; a larger value makes the curve flatter. If $\rho$ is omitted, it is implicitly assigned the value of 1. This function is semi-linear. Equivalently, $a = 1/\rho$ is the slope parameter; the slope at the origin is $a/4$. As $a$ approaches infinity, the function reduces to a threshold function. A neuron with such an activation function is called a semi-linear unit. The range of this function is $(0, 1)$. If a non-zero threshold $\theta$ is needed, the function could be defined as

$$\varphi(v) = \frac{1}{1 + e^{-(v - \theta)/\rho}}$$

These forms are called logistic functions.
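A minimal sketch of the logistic function with a shape parameter and an optional threshold is given below. The parameter names `rho` and `theta` are assumptions made for illustration. The two-branch evaluation is only there to avoid floating-point overflow and is not part of the definition.

```python
import math


def logistic(v: float, rho: float = 1.0, theta: float = 0.0) -> float:
    """Logistic sigmoid 1 / (1 + exp(-(v - theta) / rho)), with rho > 0."""
    if rho <= 0:
        raise ValueError("rho must be positive")
    z = (v - theta) / rho
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # z < 0 here, so exp(z) cannot overflow
    return ez / (1.0 + ez)


for rho in (10.0, 1.0, 0.01):
    # Larger rho flattens the curve; smaller rho approaches a step.
    print(rho, [round(logistic(v, rho), 3) for v in (-1.0, 0.0, 1.0)])
```

With `rho` close to zero the outputs for negative and positive inputs approach 0 and 1, which illustrates the limiting threshold behaviour, while the output at the threshold itself stays at 0.5.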

Another form of the sigmoid is the hyperbolic tangent function

$$\varphi(v) = \tanh(v)$$

which can be re-written as

$$\varphi(v) = \frac{e^{v} - e^{-v}}{e^{v} + e^{-v}}$$

The hyperbolic tangent's range is $(-1, 1)$.
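The hyperbolic form is closely related to the logistic function. Assuming the hyperbolic function meant here is the hyperbolic tangent, and writing $\sigma(v) = 1/(1 + e^{-v})$ for the logistic function with unit shape parameter (notation introduced here for illustration), a short derivation shows that it is a rescaled and shifted logistic:

```latex
\begin{aligned}
\tanh(v) &= \frac{e^{v} - e^{-v}}{e^{v} + e^{-v}}
          = \frac{1 - e^{-2v}}{1 + e^{-2v}} \\
         &= \frac{2 - \left(1 + e^{-2v}\right)}{1 + e^{-2v}}
          = \frac{2}{1 + e^{-2v}} - 1
          = 2\,\sigma(2v) - 1 .
\end{aligned}
```

Since $\sigma$ takes values in $(0, 1)$, this also explains why the hyperbolic tangent takes values in $(-1, 1)$.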

Binary pulse stream

Time is divided into discrete time slots. If the signal level required is $p$, where $0 \le p \le 1$, then the probability of a pulse appearing in each time slot is $p$. If the required value lies in a different range, the signal should be normalised to the unit interval.

In contrast to all previous functions, which are deterministic, the output is interpreted as the probability of outputting a $1$ rather than as an analogue signal. Neurons with such functionality are known as stochastic semi-linear units.

If $p$ is unknown, it may be estimated by counting the $1$'s: $p \approx N_1 / N$, where $N$ is the number of time slots and $N_1$ is the number of pulses, i.e. the $1$'s.
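The encoding and the counting estimate can be sketched as follows. The function names and the use of a seeded `random.Random` generator are assumptions made for illustration, and the signal level is taken to be already normalised to the unit interval.

```python
import random


def pulse_stream(level: float, n_slots: int, rng: random.Random) -> list[int]:
    """Emit a 1 in each time slot with probability `level`, else a 0."""
    if not 0.0 <= level <= 1.0:
        raise ValueError("level must be normalised to the unit interval")
    return [1 if rng.random() < level else 0 for _ in range(n_slots)]


def estimate_level(stream: list[int]) -> float:
    """Estimate the signal level as (number of 1's) / (number of slots)."""
    return sum(stream) / len(stream)


rng = random.Random(0)
stream = pulse_stream(0.3, 10_000, rng)
print(estimate_level(stream))  # close to 0.3; the exact value depends on the seed
```

The estimate becomes more reliable as the number of time slots grows, since it is the mean of independent binary draws.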

In the stochastic case, the sigmoid may be regarded as an approximation to the cumulative Gaussian (normal) distribution, and if so the model fits a noisy threshold; that is, the threshold at any time is a random variable with a Gaussian distribution. Thus, the probability of firing when the activation is $v$ is the probability that the threshold is less than $v$.
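The noisy-threshold interpretation can be illustrated with a small simulation. This is a sketch under stated assumptions: the threshold is drawn from a normal distribution whose mean and standard deviation (0 and 1 by default) are illustrative choices, and the function names are hypothetical.

```python
import math
import random


def firing_probability(v: float, mean: float = 0.0, std: float = 1.0) -> float:
    """P(threshold < v) for a threshold distributed as Normal(mean, std)."""
    return 0.5 * (1.0 + math.erf((v - mean) / (std * math.sqrt(2.0))))


def fires(v: float, rng: random.Random, mean: float = 0.0, std: float = 1.0) -> bool:
    """Draw a fresh random threshold and fire if it lies below the activation."""
    return rng.gauss(mean, std) < v


rng = random.Random(0)
v = 0.5
trials = 20_000
empirical = sum(fires(v, rng) for _ in range(trials)) / trials
print(empirical, firing_probability(v))  # the two values should be close
```

The empirical firing rate approaches the cumulative Gaussian evaluated at the activation, which is the S-shaped curve that the sigmoid approximates.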

We can think of this pulse stream in a different way. The neuron has only two states, low and high, and it fires probabilistically as follows. Let $x$ denote the state and $P(v)$ the probability of firing, where $v$ is the integrated input signal; then

$$x = \begin{cases} \text{high} & \text{with probability } P(v) \\ \text{low} & \text{with probability } 1 - P(v) \end{cases}$$

A common choice for $P(v)$ is the sigmoid function defined as follows

$$P(v) = \frac{1}{1 + e^{-v/T}}$$

where $T$ is a pseudo-temperature parameter used to control the noise level, i.e. the uncertainty of firing. As $T \to 0$, the function becomes noiseless, i.e. deterministic, and reduces to the McCulloch-Pitts threshold function.
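The effect of the pseudo-temperature can be seen in a minimal sketch of such a stochastic neuron. It assumes the sigmoid firing probability $1/(1 + e^{-v/T})$ with $T > 0$, encodes the high and low states as 1 and 0, and uses illustrative function names.

```python
import math
import random


def firing_probability(v: float, temperature: float) -> float:
    """Sigmoid firing probability 1 / (1 + exp(-v / temperature))."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    z = v / temperature
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # z < 0 here, so exp(z) cannot overflow
    return ez / (1.0 + ez)


def sample_state(v: float, temperature: float, rng: random.Random) -> int:
    """Return 1 (high, fires) with the firing probability, else 0 (low)."""
    return 1 if rng.random() < firing_probability(v, temperature) else 0


rng = random.Random(0)
for temperature in (5.0, 1.0, 0.01):
    rate = sum(sample_state(0.5, temperature, rng) for _ in range(5000)) / 5000
    print(temperature, round(firing_probability(0.5, temperature), 3), rate)
```

At high temperature the neuron fires almost at random regardless of its input, while at very low temperature a positive input makes it fire almost surely and a negative input almost never, which matches the deterministic threshold limit.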